How a Top Biopharma Cut Data Processing Time from 24 Hours to 1

Over a decade ago, an acquisition made this biopharma part of one of the largest global life sciences companies. The company continued to thrive under its new owner, reaching the point where it needed a data warehouse solution. “We faced the classic ‘build-or-buy’ dilemma. At the time, master data management (MDM) solutions — especially in pharma — were not very mature, so it made sense to custom-build,” the company’s IT director says.

The team used its home-grown platform to pull data from multiple sources into one repository to clean, organize, and prepare it for analytics and storage. Over time, however, the team saw several reasons to adopt a multi-domain MDM: data needs and sources ballooned as teams and territories grew, and the long-term effort and cost of maintaining the platform were getting harder to predict.

“We’re still growing. Data changes all the time as we adopt new targets and geographies. What started out well slowly became difficult to maintain,” the IT director says.

More data sources, less certainty about quality and speed

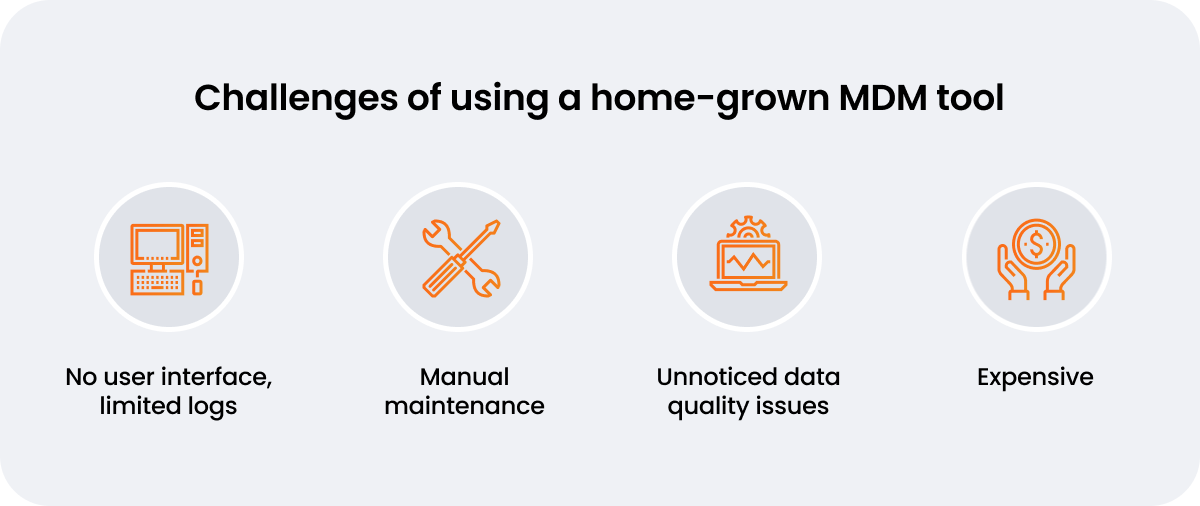

“While we’re relatively small in size, our brands cover a range of indications, which creates challenges when maintaining data with a small but very skilled team,” the IT director says. Despite his team’s best efforts using their data warehouse solution, data wasn’t meeting their high quality and timeliness standards, maintenance was expensive yet still partially manual, and the interface wasn’t user-friendly.

Additional management difficulties resulted from:

- Hard-coded rules that prohibited updates and upgrades

- An inability for users to self-serve

- Low data quality that went unnoticed

- Difficulty integrating additional sources

The IT team knew that operational effectiveness and trust could quickly erode when sales, marketing, and medical teams lack the data they need to do their jobs. They took a step back to consider what was important to the company — achieving greater quality, speed, and scalability in serving customers — and pivoted to a new strategy for MDM.

The biopharma’s MDM administrator also recalls the hurdles he faced when reconciling multiple data sources in the custom MDM. “Each has its standards, data layouts, and challenges, such as bad data and null values. Some vendors just forgot to put in an address or provide an NPI. Sometimes, we had wrong licenses from different vendors,” he says.

In addition, ad hoc requests stretched IT resources thin, partly because users couldn’t self-serve even for simple requests. Finally, the custom solution’s traditional job scheduling was a bottleneck: a single large job ran once a day, so data queued up until the next run. “Our business would have to wait up to 24 hours before seeing the data. We needed a better way,” the MDM administrator says.

A solution to keep pace with growth

A decade after building the custom data warehouse, the IT team made a switch to ensure internal customers could access the high-quality data they needed. “Our solution was to use Veeva Network to help us master the data from different sources with different layouts into what we call the golden record,” the network administrator says.

Once the company decided it was time to evaluate requirements for a purpose-built MDM solution, it explored multiple options. “We looked at the quality of the master data standard integrations, usability, features, cost, and, importantly, the pace of innovation at the company we’d be partnering with. Ultimately, this led to our selection of Veeva Network and Veeva OpenData,” the IT director says.

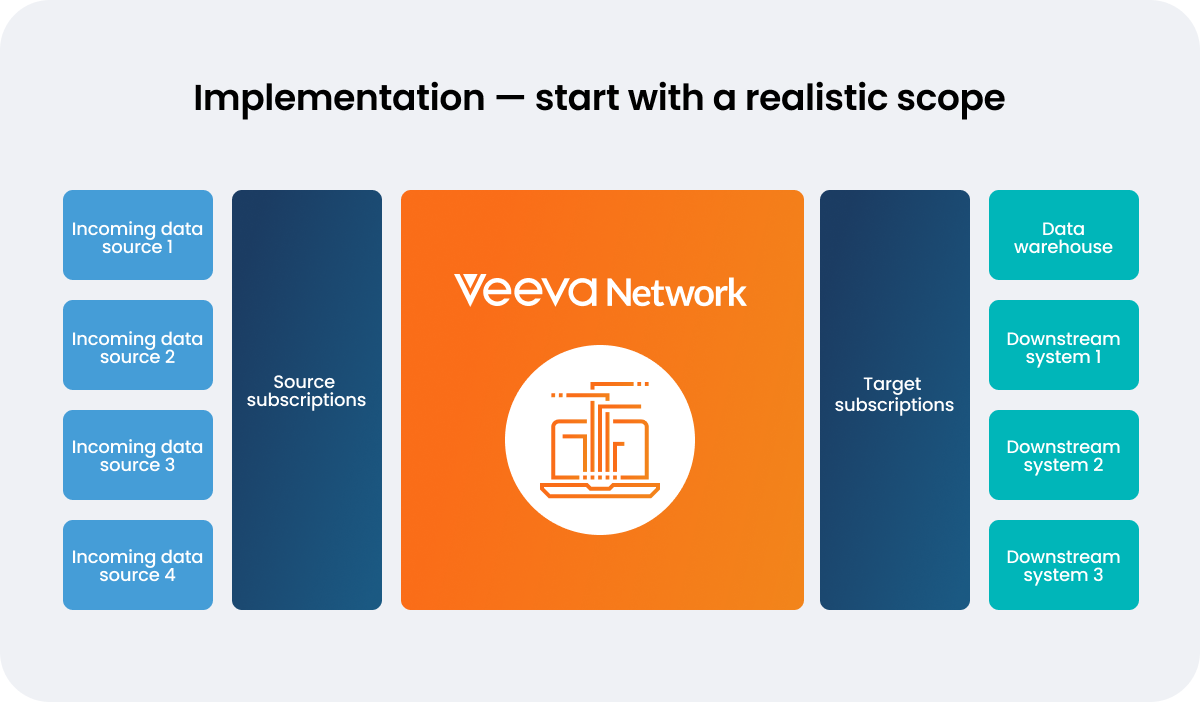

When adding data sources to the MDM, the team kept it simple at first, focusing on key sources and targets, then added more over time.

A leap forward in data quality and speed

The MDM administrator says that with Veeva Network and OpenData, the IT team now runs event-driven data processing jobs instead of relying on one traditionally scheduled daily job. “As our ETL processes run, a connection is made to the Veeva Network API to kick off source and target subscriptions in real time.” Business users are extremely pleased that data change requests are now fulfilled in an hour or less, down from a day or more.
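The shift from one daily batch to event-driven processing can be illustrated with a minimal Python sketch. It assumes a simple in-process event bus; `LoadCompleted`, `EventBus`, and `run_subscriptions` are hypothetical names, and the placeholder handler only records what it would trigger rather than calling the actual Veeva Network API, whose endpoints the case study does not describe.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoadCompleted:
    """Hypothetical event an ETL job emits when it finishes loading one source."""
    source: str


@dataclass
class EventBus:
    handlers: dict[type, list[Callable]] = field(default_factory=dict)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self.handlers.setdefault(event_type, []).append(handler)

    def publish(self, event: object) -> None:
        # Handlers run as soon as an event arrives, instead of waiting for a
        # fixed once-a-day batch window.
        for handler in self.handlers.get(type(event), []):
            handler(event)


# Records what was triggered; stands in for real API calls.
triggered: list[tuple[str, str]] = []


def run_subscriptions(event: LoadCompleted) -> None:
    # Placeholder for calls to the MDM's API. The real endpoints are not
    # described in the case study, so these entries are hypothetical.
    triggered.append(("source", event.source))  # load the new data into the MDM
    triggered.append(("target", event.source))  # push mastered data downstream


bus = EventBus()
bus.subscribe(LoadCompleted, run_subscriptions)

# Each ETL job publishes its own event the moment it completes.
bus.publish(LoadCompleted("vendor_a"))
bus.publish(LoadCompleted("vendor_b"))
print(triggered)
```

Because each load triggers its own subscriptions, data from one source no longer waits behind every other source in a single nightly job.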

Developing match rules by source in Veeva Network also enables IT to improve data quality in downstream systems. “Our business needs to trust in the golden customer record, and it lends credibility that we’re constantly trying to improve data quality. It’s not a one-and-done process,” the MDM administrator says. To maintain quality, the team uses tools in Veeva Network to:

- Review match rules

- Rank data by source

- Create KPIs for match rates
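Ranking data by source and tracking match rates can be sketched in a few lines of Python. This is a simplified, assumed illustration of source-ranked survivorship, not how Veeva Network implements it: `SOURCE_RANK`, the `reference_data` source, and the sample records are all hypothetical, and the records are assumed to have already been matched to the same HCP.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical trust ranking per source (1 = most trusted). The case study says
# the team ranks data by source but does not publish its actual ranking.
SOURCE_RANK = {"reference_data": 1, "vendor_a": 2, "vendor_b": 3}
FIELDS = ("npi", "address")


@dataclass
class SourceRecord:
    source: str
    npi: Optional[str]
    address: Optional[str]


def golden_record(records: list[SourceRecord]) -> dict[str, Optional[str]]:
    """Merge records already matched to one HCP into a single golden record."""
    # Most trusted source first.
    ranked = sorted(records, key=lambda r: SOURCE_RANK[r.source])
    merged: dict[str, Optional[str]] = {}
    for name in FIELDS:
        # Take the first non-null value; a lower-ranked source fills gaps
        # such as a missing NPI or address.
        merged[name] = next(
            (getattr(r, name) for r in ranked if getattr(r, name) is not None),
            None,
        )
    return merged


def match_rate(matched: int, total: int) -> float:
    """KPI: share of incoming records that matched an existing golden record."""
    return matched / total if total else 0.0


records = [
    SourceRecord("vendor_b", npi="0000000001", address="1 Main St"),
    SourceRecord("vendor_a", npi=None, address="10 Oak Ave"),
]
print(golden_record(records))  # {'npi': '0000000001', 'address': '10 Oak Ave'}
print(match_rate(45, 50))      # 0.9
```

Here `vendor_a` outranks `vendor_b`, so its address wins, while its missing NPI is filled from the lower-ranked source; tracking `match_rate` per source over time is one way to spot a feed whose quality is slipping.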

Finally, IT has met the business’s need for a custom view of health system hierarchies by using Hierarchy Explorer in Veeva Network. “Once we built the hierarchy, we needed a business-friendly way to display it, showing how HCPs roll up to healthcare organizations. That’s where the business portal comes in,” the MDM administrator says.

For others considering the switch to Veeva Network, the IT director says, “I recommend spending a lot of time analyzing your source data and running iteration after iteration to lower the volume of work for your data stewards. We worked with Veeva Network Professional Services to fine-tune our match rules, allowing us to understand the tool better and grow into it. We made a huge leap forward with Veeva Network.”

Do you have the right data to engage with customers faster and more effectively?