
Gaining Efficiencies in the Monitoring Visit Report Process

Dec 11, 2019 | Veeva


In a previous post, we explored how the use of monitoring reviewer comments in Vault CTMS improves monitoring efficiency. In this post, Ora discusses how they are using the feature to optimise trial processes.

Ora, Inc. is a full-service research organisation specialising in pre-clinical and clinical ophthalmic drug and device development. Monitoring is one of our largest groups and has the most users, so streamlining processes and reducing costs is critical.

We continue to optimise our business processes and innovate with Vault CTMS by adopting new features as soon as Veeva releases them. We implemented monitoring reviewer comments in our Vault two days after the feature was released in May 2019, with the goal of streamlining the 60 to 80 monitoring events we conduct each month. Since we went live with the feature, CRAs have added more than 3,000 reviewer comments, which prompted us to analyse the comments in more depth.

Deep Dive: Ora’s Experience & Lessons Learned with Monitoring Reviewer Comments

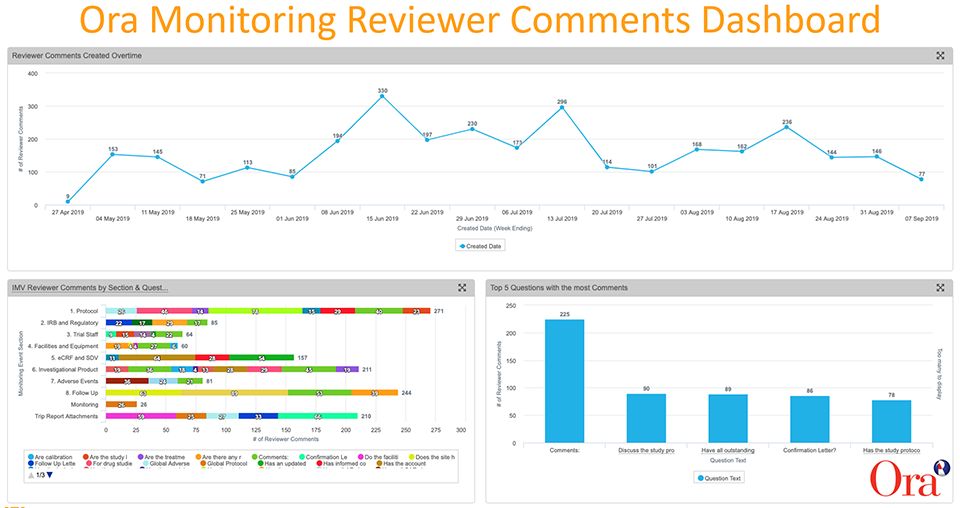

Vault CTMS captures monitoring reviewer comments as reportable data, and configurable dashboards let us visualise trends and drill deeper into the data. We created dashboards that show at-a-glance KPIs, including:

- The number of reviewer comments over time

- The top five questions with the most comments

- IMV (interim monitoring visit) reviewer comments by monitoring event section (e.g., protocol, IRB and regulatory, facilities and equipment, eCRF and SDV)
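We build these KPIs with Vault's configurable dashboards, not code. Still, the counting behind two of them is simple to illustrate. The following is a minimal sketch, assuming reviewer comments have been exported as plain records. The field names (`event_type`, `section`, `question`) and the sample values are hypothetical and do not represent the Vault CTMS data model or API.

```python
from collections import Counter

# Hypothetical export of reviewer comments. The field names and values are
# illustrative assumptions, not the Vault CTMS data model.
comments = [
    {"event_type": "IMV", "section": "Protocol", "question": "Comments?"},
    {"event_type": "IMV", "section": "eCRF and SDV", "question": "Comments?"},
    {"event_type": "SEV", "section": "Facilities and equipment",
     "question": "Is drug storage adequate?"},
    {"event_type": "IMV", "section": "eCRF and SDV",
     "question": "Was SDV completed for all subjects?"},
]


def top_questions(records, n=5):
    """Return up to n (question, comment count) pairs, most-commented first."""
    return Counter(r["question"] for r in records).most_common(n)


def imv_comments_by_section(records):
    """Count IMV reviewer comments per monitoring event section."""
    return Counter(r["section"] for r in records if r["event_type"] == "IMV")


print(top_questions(comments))
print(imv_comments_by_section(comments))
```

`Counter.most_common(5)` directly answers "top five questions with the most comments". Filtering on event type before counting gives the per-section IMV breakdown.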

The analysis of the data allowed us to identify trends, spot issues, and take action.

1. FINDING: Site evaluation visits (SEVs) received significantly more reviewer comments.

SEVs average 32 comments per monitoring event, compared with an average of 8.8 comments for IMVs.

ACTION: Investigate the comments logged on SEVs to determine why those reports receive nearly four times as many comments as IMV reports.

We are currently analysing the data to determine whether the high number of comments results from CRAs receiving new information about sites, from a need for refresher training, or from SEV questions that need to be simplified. Once we identify the root cause, we can take data-driven action.
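The SEV versus IMV comparison rests on one piece of arithmetic. For each event type, the average is the total number of comments divided by the number of events, and the two averages are then compared. Here is a minimal sketch with hypothetical per-event counts. They were chosen only so the averages match the figures reported above (32 for SEVs, 8.8 for IMVs) and are not Ora's data.

```python
from collections import defaultdict

# Hypothetical per-event comment counts, chosen only so the averages match
# the figures reported in the post; they are not real monitoring data.
events = [
    {"event_type": "SEV", "comments": 30},
    {"event_type": "SEV", "comments": 34},
    {"event_type": "IMV", "comments": 8},
    {"event_type": "IMV", "comments": 9},
    {"event_type": "IMV", "comments": 9},
    {"event_type": "IMV", "comments": 9},
    {"event_type": "IMV", "comments": 9},
]


def average_comments_by_type(records):
    """Average number of reviewer comments per monitoring event, by event type."""
    totals = defaultdict(int)
    counts = defaultdict(int)
    for r in records:
        totals[r["event_type"]] += r["comments"]
        counts[r["event_type"]] += 1
    return {t: totals[t] / counts[t] for t in totals}


averages = average_comments_by_type(events)
ratio = averages["SEV"] / averages["IMV"]
print(averages, round(ratio, 1))
```

With averages of 32 and 8.8, the ratio is about 3.6, or roughly four times as many comments per SEV.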

2. FINDING: Open-ended questions (where users can type in their response) and longer questions have the highest number of comments.

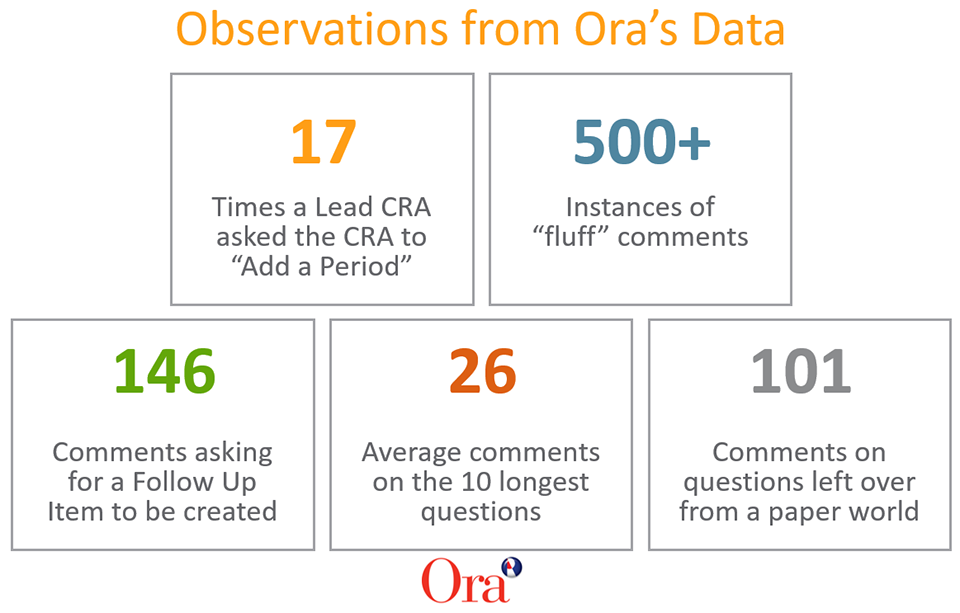

We have a question called “Comments?” on our Vault monitoring events, where CRAs can type any response. This question received the most reviewer comments (more than 180). We also found that questions longer than 100 characters attract more comments. We suspect these questions confuse CRAs, who are unsure what to enter in the field.

ACTION: Restructure the questions.

We will reduce the number of open-ended questions and shorten long ones, as the data shows that shorter, more succinct questions receive fewer comments.
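Before restructuring, we need to know which questions to review. A simple screen follows the two patterns in this finding: flag any question that is open-ended or exceeds 100 characters. This is a minimal sketch only. The question records and the `open_ended` flag are hypothetical, and only the 100-character threshold comes from our data.

```python
# The 100-character threshold comes from the finding above; the question
# records and their "open_ended" flag are hypothetical.
MAX_QUESTION_LENGTH = 100

questions = [
    {"text": "Comments?", "open_ended": True},
    {"text": ("Was the investigational product storage temperature log reviewed, "
              "and were all excursions documented, reported, and resolved per protocol?"),
     "open_ended": False},
    {"text": "Is the current ICF version in use?", "open_ended": False},
]


def needs_restructuring(question, max_length=MAX_QUESTION_LENGTH):
    """Flag open-ended questions and questions longer than max_length characters."""
    return question["open_ended"] or len(question["text"]) > max_length


flagged = [q["text"] for q in questions if needs_restructuring(q)]
for text in flagged:
    print(len(text), text)
```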

3. FINDING: 17% of comments were responses such as “ok” or “resolved.”

We found more than 500 of these “fluff” comments. For example, a study manager may ask a CRA to update a protocol deviation; the CRA replies “ok” or “resolved” and then resolves both reviewer comments.

ACTION: Retrain CRAs on the correct process for resolving monitoring reviewer comments.

CRAs will take a refresher course so they know to simply resolve the comment rather than reply to it.
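Once CRAs are retrained, a simple recurring check can show whether fluff replies are declining. This minimal sketch counts a reply as fluff only when, after trimming case, whitespace and punctuation, it is exactly “ok” or “resolved”. That rule is a simplifying assumption and is not how Vault CTMS classifies comments. The sample replies are also hypothetical.

```python
import string

# Replies the post identifies as "fluff"; treating only these two words as
# fluff (after trimming case and punctuation) is a simplifying assumption.
FLUFF_REPLIES = {"ok", "resolved"}


def is_fluff(reply):
    """True if a reply is only an acknowledgement such as "ok" or "resolved"."""
    normalized = reply.strip().lower().strip(string.punctuation + " ")
    return normalized in FLUFF_REPLIES


def fluff_share(replies):
    """Fraction of replies that are fluff (0.0 for an empty list)."""
    if not replies:
        return 0.0
    return sum(is_fluff(r) for r in replies) / len(replies)


sample = ["OK.", "Resolved!", "Updated the deviation date to 03-Nov", "ok"]
print(fluff_share(sample))
```

The same arithmetic cross-checks the reported figures: 500 fluff comments out of roughly 3,000 is about 16.7%, consistent with the 17% above.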

4. FINDING: Some reviewer comments are resolved after the required 10 days.

Ora’s compliance procedures require that monitoring events be approved within 10 days. Most comments are resolved within the first three days. However, we found instances where comments were resolved after 10 days, so the monitoring events were approved outside our 10-day SLA.

ACTION: Review a weekly dashboard to ensure we comply with company policies.

We are closely monitoring when reviewer comments aren’t resolved within the required timeline to determine if there are deeper issues that require corrective action.
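The weekly check amounts to measuring how long each monitoring event has taken to reach approval and flagging anything over 10 days. Events that are still pending count up to today. This is a minimal sketch. The post does not say when the 10-day clock starts, so the sketch assumes it starts at report submission, and the event records and field names are hypothetical.

```python
from datetime import date

SLA_DAYS = 10  # Ora's approval window, per the post

# Hypothetical monitoring events. The post does not say when the 10-day
# clock starts; this sketch assumes it starts at report submission.
events = [
    {"event_id": "IMV-001", "submitted": date(2019, 11, 1), "approved": date(2019, 11, 4)},
    {"event_id": "IMV-002", "submitted": date(2019, 11, 1), "approved": date(2019, 11, 14)},
    {"event_id": "SEV-003", "submitted": date(2019, 11, 5), "approved": None},
]


def sla_breaches(records, today, sla_days=SLA_DAYS):
    """List (event_id, elapsed_days) for events approved, or still pending, beyond sla_days."""
    breaches = []
    for r in records:
        # Pending events (approved is None) are measured up to today.
        end = r["approved"] or today
        elapsed = (end - r["submitted"]).days
        if elapsed > sla_days:
            breaches.append((r["event_id"], elapsed))
    return breaches


print(sla_breaches(events, today=date(2019, 12, 11)))
```

Pending events are included deliberately. An event that has not been approved yet can already be out of compliance, and it would be missed if only approved events were checked.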

5. FINDING: Latent questions from previous paper-based trip reports are still active.

These questions are left over from our days of paper trip reports. Vault CTMS now automatically generates confirmation and follow-up letters and relates those documents to the monitoring event, so the questions duplicate data. We found this confuses CRAs, who must answer a question whose answer is already populated automatically by a field on the monitoring event.

ACTION: Remove redundant questions from monitoring events.

Our aim is to alleviate confusion and speed the process of completing the form.

We look forward to taking action on these patterns and continuing to streamline and optimise trial management with Vault CTMS. Watch this 13-minute video to learn more about our experience with monitoring reviewer comments.